Compass projects lower qualifying scores for the Class of 2027

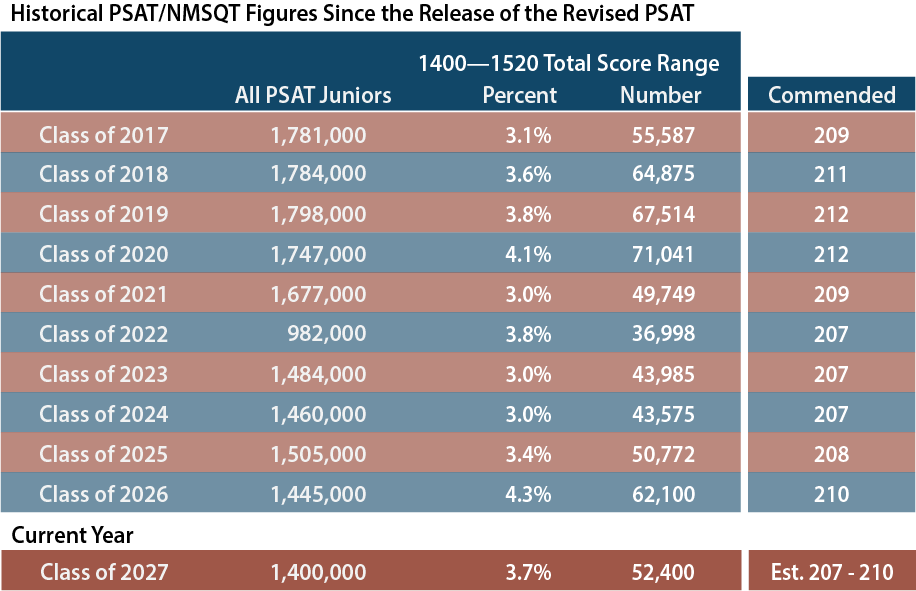

The junior year PSAT is also the NMSQT — the National Merit Scholarship Qualifying Test. Each year, National Merit recognizes the top 57,000 test takers. In order to be included in that group, students need to be among the top 4% of the 1.4 million students who take the PSAT. Of that elite group, approximately 17,000 are recognized as Semifinalists for having the highest scores within their states. Semifinalists have the opportunity to continue in the program to become Finalists and, potentially, scholarship recipients. The remaining 40,000 students are recognized as Commended Students. More information can be found in our National Merit Explained post.

For the Class of 2027, the exact scores needed to qualify (“cutoffs”) will not be released until September 2026 (the Commended cutoff is likely to leak in April). In order to give students context for their test results, Compass has analyzed the scores from the October 2025 PSAT/NMSQT to produce estimates for the national Commended cutoff and the individual state cutoffs. We predict that the majority of state cutoffs will decline this year.

National Merit uses the Selection Index (SI), found on the PSAT score report, to rank students. The Selection Index can be calculated by doubling the Reading & Writing score (RW), adding the Math score (M), and dividing that sum by 10. For example, a student with a 720 RW and 700 M score has a Selection Index of 214 — (720 x 2 + 700)/10. The weighting of the index means that not all students with a 1420 Total Score have the same SI. A student with a 700 RW and 720 M has a Selection Index of 212. The highest SI a student can receive is 228 (760 RW and 760 M). Last year, the Commended cutoff was 210, and Semifinalist cutoffs ranged from 210 to 225 depending on the competitiveness of the state.
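As a quick check on the arithmetic, here is a minimal Python sketch of the Selection Index formula described above. The function name `selection_index` is our own illustration, not an official NMSC or College Board tool, and it assumes section scores are multiples of 10 between 160 and 760, as on the PSAT/NMSQT.

```python
def selection_index(rw: int, math: int) -> int:
    """Selection Index = (2 * RW + Math) / 10.

    Assumes PSAT section scores are multiples of 10 from 160 to 760,
    so the division by 10 is always exact.
    """
    for score in (rw, math):
        if score % 10 != 0 or not 160 <= score <= 760:
            raise ValueError(f"invalid PSAT section score: {score}")
    return (2 * rw + math) // 10


# The three examples from the paragraph above
print(selection_index(720, 700))  # 214
print(selection_index(700, 720))  # 212
print(selection_index(760, 760))  # 228 (the maximum)
```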

Why do we foresee lower cutoff scores?

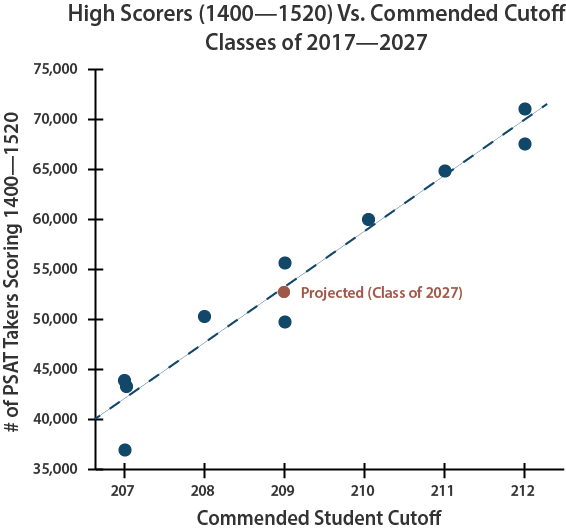

College Board does not release any information about the distribution of Selection Indexes, but it does produce summaries of broad score ranges. The number of students in the 1400 – 1520 Total Score range has proved to be an excellent indicator of the Commended cutoff. The Class of 2027 saw 16% fewer top scores than did last year’s class. The 52,400 students at 1400 and above is closer to what we saw two years ago with the Class of 2025 and, going back further, similar to the results for the Classes of 2017 and 2021.

Most of the students who will qualify for National Merit recognition fall in the 1400 – 1520 band (or just outside it). With approximately 10,000 fewer students in the top band of scores this year, we expect the Commended level to drop to 208 or 209. The chart below shows the historical relationship between high scores and the Commended cutoff.
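The “approximately 10,000 fewer” figure follows from the two numbers given above. A back-of-envelope check, assuming the 16% decline is measured against last year’s count of 1400+ scores (the implied prior-year count is derived here, not a figure reported by College Board):

```python
# Figures from the text: 52,400 students scored 1400+ on the 2025 PSAT,
# described as 16% fewer than the previous class.
this_year = 52_400
decline = 0.16

# Implied count for last year's class, backed out from the rounded 16%.
# This is an estimate, not a published College Board number.
implied_last_year = this_year / (1 - decline)
fewer = implied_last_year - this_year

print(round(implied_last_year))  # 62381
print(round(fewer))  # 9981
```

Because the 16% is itself rounded, the implied difference is only approximate, but it lands right around 10,000.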

Explaining why last year’s cutoffs reached record levels

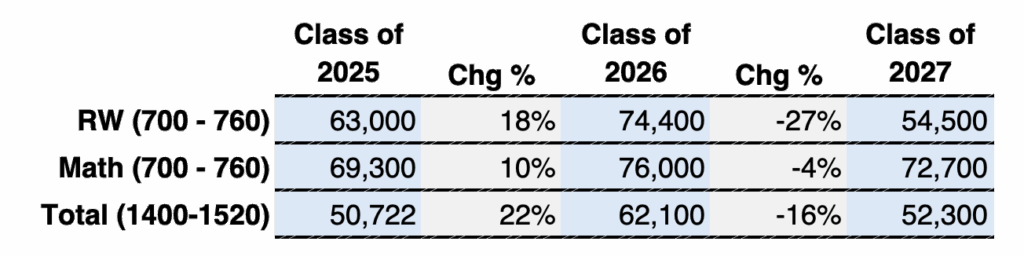

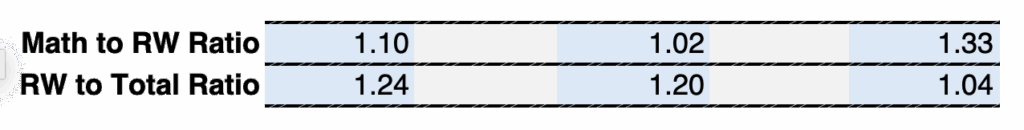

After examining this year’s numbers, we also have a better understanding of why Semifinalist cutoffs jumped to such extreme levels last year, and why it should not happen again. The 2024 PSAT saw an unprecedented 18% jump in the number of high Reading & Writing scores (700-760). The weighting of RW in the Selection Index magnified the impact of that change. The table below shows scores for the last three years and how results have fluctuated.

On the 2025 PSAT, the number of high RW scores dropped by 27%. In fact, the RW count for this year is even lower than it was two years ago. The decline in RW scores could even produce Selection Indexes lower than those in the Class of 2025 — at least in some states. The Commended cutoff may provide additional insight in the spring.
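The two percentages compound, which is why a 27% drop more than erases an 18% gain. A quick sketch, assuming both figures are year-over-year changes in the same count of 700-760 RW scores (the Class of 2025 count is indexed to 1.0, so no absolute numbers are assumed):

```python
# Index the Class of 2025 (2023 PSAT) count of 700-760 RW scores to 1.0.
class_of_2025 = 1.0
class_of_2026 = class_of_2025 * (1 + 0.18)  # 18% jump on the 2024 PSAT
class_of_2027 = class_of_2026 * (1 - 0.27)  # 27% drop on the 2025 PSAT

print(f"{class_of_2027:.3f}")  # 0.861
print(class_of_2027 < class_of_2025)  # True
```

Under that assumption, high RW scores on the 2025 PSAT ran roughly 14% below the level of two years earlier.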

The ratio of 700+ M scores to 700+ RW scores reveals just how bizarre last year’s spike was. Traditionally, Math scores are more spread out at the extremes: there are more very high Math scores on the PSAT and SAT, and there are more very low Math scores.

On the October 2024 PSAT, though, almost as many students scored 700-760 on RW as on Math. The ratio of high Math scores to high RW scores was 1.02. This helps explain why some cutoffs went as high as 224 and 225 for the first time ever: students achieved 750 and 760 RW scores in record numbers. In most years, for example, more students would score 730 RW and 760 M (222 SI) than 760 RW and 730 M (225 SI). That was not true for the Class of 2026, where the distribution was more even. Scores for the Class of 2027 appear to have returned to the traditional Math-heavy distribution: the ratio of high Math scores to high RW scores was 1.33 on the 2025 PSAT/NMSQT. For this reason, we don’t believe we will see a repeat of last year’s 224 and 225 cutoffs.
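Another way to see the RW weighting: because 2 × RW + M = (RW + M) + RW, the Selection Index equals (Total + RW) / 10. The hypothetical helper below (a sketch, not an official tool) lists every legal split of a 1490 total and the resulting index, reproducing the 222-to-225 spread in the 730/760 versus 760/730 example.

```python
def splits_for_total(total: int) -> list[tuple[int, int, int]]:
    """List (RW, Math, Selection Index) for every legal split of a total score.

    Since 2*RW + M = (RW + M) + RW, the index equals (total + RW) / 10:
    with the total fixed, moving 10 points from Math to RW adds 1 to the SI.
    Section scores are assumed to be multiples of 10 from 160 to 760.
    """
    splits = []
    for rw in range(160, 761, 10):
        math = total - rw
        if 160 <= math <= 760:
            splits.append((rw, math, (total + rw) // 10))
    return splits


for rw, math, si in splits_for_total(1490):
    print(f"RW {rw} + Math {math} -> SI {si}")
```

For a 1490 total, only four splits are possible, and the index climbs from 222 to 225 as points shift from Math to RW. A year with unusually many 750 and 760 RW scores therefore pushes students to the top of the Selection Index scale.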

Was last year a fluke, a shift, or a trend?

The results from the Class of 2027 have answered one of the nagging questions from last year: Were the high cutoffs a fluke, a shift, or a trend? The answer appears to be fluke. More accurately, the results could be labeled an error in test construction and scaling. There is no evidence that the Class of 2026 possessed special test-taking skills; as sophomores, that class performed in line with expectations on the 2023 PSAT. Nor is there evidence that the Class of 2027 has diminished skills; as sophomores, that class saw the same inflated scores as did the juniors on the 2024 PSAT.

The best explanation is that College Board lost control of the scale. College Board dramatically shortened the PSAT when it switched from paper to digital. On the paper test, the Reading and Writing sections had 91 questions, and students were given 95 minutes. The RW section on the digital PSAT is cut to 54 questions over 64 minutes, and only 50 of those questions count toward a student’s score! A shorter exam means a less reliable exam. We saw the occasional wild ride on the paper PSAT, but the rides may get wilder and more frequent on the digital PSAT.

We expect the majority of state Semifinalist cutoffs to decline for the Class of 2027. Knowing this overall trend, however, doesn’t tell us which states will be in that majority or how much lower scores will go. The table below provides a Most Likely cutoff score, but the more useful information is the Estimated Range. Almost all cutoffs should fall within that range.

| State | Class of 2027 (Most Likely) | Class of 2027 (Est. Range) | Class of 2026 (Actual) | Class of 2025 (Actual) | Class of 2024 (Actual) | Avg NMSFs |
|---|---|---|---|---|---|---|
| Alabama | 213 | 210 - 216 | 214 | 212 | 210 | 250 |
| Alaska | 214 | 210 - 216 | 215 | 214 | 209 | 35 |
| Arizona | 218 | 215 - 220 | 218 | 217 | 216 | 398 |
| Arkansas | 213 | 210 - 216 | 215 | 213 | 210 | 143 |
| California | 223 | 220 - 224 | 224 | 221 | 221 | 2,115 |
| Colorado | 218 | 216 - 221 | 219 | 218 | 216 | 286 |
| Connecticut | 222 | 220 - 223 | 223 | 221 | 221 | 175 |
| Delaware | 219 | 218 - 221 | 220 | 219 | 219 | 44 |
| Florida | 217 | 216 - 220 | 219 | 217 | 216 | 999 |
| Georgia | 219 | 217 - 221 | 220 | 218 | 217 | 602 |
| Hawaii | 218 | 215 - 220 | 219 | 217 | 217 | 62 |
| Idaho | 214 | 211 - 217 | 215 | 213 | 211 | 96 |
| Illinois | 220 | 218 - 222 | 222 | 220 | 219 | 704 |
| Indiana | 217 | 214 - 219 | 218 | 217 | 216 | 313 |
| Iowa | 213 | 211 - 216 | 214 | 212 | 210 | 145 |
| Kansas | 216 | 213 - 219 | 216 | 215 | 214 | 144 |
| Kentucky | 214 | 211 - 217 | 214 | 213 | 211 | 201 |
| Louisiana | 215 | 212 - 218 | 216 | 214 | 214 | 222 |
| Maine | 215 | 212 - 217 | 217 | 214 | 213 | 55 |
| Maryland | 223 | 221 - 225 | 224 | 222 | 221 | 308 |
| Massachusetts | 223 | 221 - 225 | 225 | 223 | 222 | 318 |
| Michigan | 219 | 216 - 220 | 220 | 218 | 217 | 485 |
| Minnesota | 218 | 216 - 220 | 219 | 217 | 216 | 279 |
| Mississippi | 213 | 210 - 215 | 213 | 212 | 209 | 155 |
| Missouri | 216 | 213 - 218 | 217 | 215 | 214 | 289 |
| Montana | 211 | 208 - 214 | 213 | 209 | 209 | 47 |
| Nebraska | 213 | 210 - 216 | 214 | 211 | 210 | 105 |
| Nevada | 214 | 211 - 217 | 214 | 214 | 211 | 168 |
| New Hampshire | 217 | 214 - 219 | 219 | 217 | 215 | 60 |
| New Jersey | 223 | 222 - 225 | 225 | 223 | 223 | 451 |
| New Mexico | 211 | 208 - 214 | 210 | 211 | 207 | 104 |
| New York | 221 | 219 - 223 | 223 | 220 | 220 | 1,012 |
| North Carolina | 219 | 216 - 221 | 220 | 218 | 217 | 510 |
| North Dakota | 210 | 207 - 212 | 210 | 210 | 207 | 30 |
| Ohio | 218 | 215 - 220 | 219 | 217 | 216 | 538 |
| Oklahoma | 212 | 208 - 214 | 212 | 211 | 208 | 204 |
| Oregon | 218 | 215 - 220 | 219 | 216 | 216 | 188 |
| Pennsylvania | 220 | 217 - 222 | 221 | 219 | 219 | 596 |
| Rhode Island | 217 | 214 - 220 | 219 | 217 | 215 | 47 |
| South Carolina | 214 | 210 - 217 | 215 | 214 | 209 | 236 |
| South Dakota | 210 | 207 - 213 | 211 | 208 | 209 | 42 |
| Tennessee | 218 | 215 - 219 | 219 | 217 | 217 | 319 |
| Texas | 221 | 218 - 222 | 222 | 219 | 219 | 1,623 |
| Utah | 212 | 210 - 216 | 213 | 211 | 209 | 196 |
| Vermont | 215 | 211 - 217 | 216 | 215 | 212 | 28 |
| Virginia | 222 | 220 - 224 | 224 | 222 | 219 | 437 |
| Washington | 222 | 220 - 224 | 224 | 222 | 220 | 348 |
| West Virginia | 209 | 207 - 212 | 210 | 209 | 207 | 64 |
| Wisconsin | 214 | 213 - 217 | 215 | 214 | 213 | 292 |
| Wyoming | 209 | 207 - 212 | 210 | 209 | 207 | 24 |
| District of Columbia | 223 | 222 - 225 | 225 | 223 | 223 | 36 |
| Territories | 209 | 207 - 211 | 210 | 208 | 207 | 39 |
| Outside US | 223 | 222 - 225 | 225 | 223 | 223 | 86 |
| Commended | 209 | 207 - 210 | 210 | 208 | 207 | |

How cutoffs are determined

Qualifying scores (“cutoffs”) are not based on the total score for the PSAT (320-1520) but on the Selection Index, which is calculated by doubling the RW score, adding the Math score, and then dividing the sum by 10. The maximum Selection Index is 228. Students can find a historical set of cutoff data here or see how Semifinalist and Commended counts have changed state by state.

We estimate that the Semifinalist cutoffs will range from 209 to 223. Semifinalists are allocated by state, and cutoffs are calculated by state. If Florida is allocated 1,000 Semifinalists based on its population of high school graduates, then NMSC works down from a perfect 228 Selection Index until it gets as close as possible to that target. Last year, 1,008 students scored at or above the cutoff of 219. A cutoff of 220 would have produced too few Semifinalists. A cutoff of 218 would have gone over the allocation. Because score levels can get crowded, it is easy for cutoffs to move up or down a point even when there is minimal change in testing behavior or performance.
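The procedure described above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not NMSC’s actual code: the per-index counts are invented (only the 1,000-student target and the 1,008 students at 219 or above echo the Florida example), NMSC does not publish these distributions, and the tie-breaking rule is our assumption.

```python
def find_cutoff(counts_by_si: dict[int, int], target: int, floor: int) -> int:
    """Return the Selection Index cutoff whose at-or-above count is
    closest to `target`, scanning down from the maximum SI of 228.

    counts_by_si maps each Selection Index to the number of students with
    exactly that index. `floor` is the Commended level, since no
    Semifinalist cutoff can go below it. Ties keep the higher cutoff,
    which is an assumption rather than a documented NMSC rule.
    """
    best_cutoff, best_gap = 228, None
    at_or_above = 0
    for si in range(228, floor - 1, -1):
        at_or_above += counts_by_si.get(si, 0)
        gap = abs(at_or_above - target)
        if best_gap is None or gap < best_gap:
            best_cutoff, best_gap = si, gap
    return best_cutoff


# Invented counts for one hypothetical state: 880 students at 220 or above,
# 1,008 at 219 or above, and 1,150 at 218 or above.
hypothetical_counts = {
    228: 10, 227: 25, 226: 45, 225: 70, 224: 95,
    223: 120, 222: 150, 221: 170, 220: 195,
    219: 128, 218: 142, 217: 150,
}
print(find_cutoff(hypothetical_counts, target=1_000, floor=210))  # 219
```

With these made-up numbers, 220 falls 120 short of the target and 218 overshoots by 150, so 219 (8 over) is chosen. A few dozen students clustered at one index are enough to move a cutoff by a point.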

Why does each state have its own Semifinalist cutoff if the program is NATIONAL Merit?

This is always a hot-button question. NMSC allocates the approximately 17,000 Semifinalists among states based on the number of high school graduates. That way, students across the nation are represented. It also means that there are very different qualifying standards from state to state. A Massachusetts student with a 220 might miss out on being a Semifinalist. If she lived 10 miles away in New Hampshire, she would qualify.
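A minimal sketch of allocation in proportion to graduates. The state names and graduate counts are hypothetical, and simple rounding is an assumption; the post does not describe NMSC’s exact rounding method.

```python
def allocate_semifinalists(total: int, graduates: dict[str, int]) -> dict[str, int]:
    """Split `total` Semifinalist slots in proportion to graduate counts.

    Simple rounding is an assumption; for arbitrary inputs the rounded
    shares may not sum exactly to `total`.
    """
    all_graduates = sum(graduates.values())
    return {state: round(total * n / all_graduates) for state, n in graduates.items()}


# Hypothetical states and graduate counts, chosen for round numbers.
example = {"State A": 500_000, "State B": 300_000, "State C": 200_000}
print(allocate_semifinalists(17_000, example))
# {'State A': 8500, 'State B': 5100, 'State C': 3400}
```

The allocation depends only on graduates, not on how well a state’s students score, which is why the same Selection Index can qualify in one state and fall short in a neighboring one.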

No Semifinalist cutoff can be lower than the national Commended level. Cutoffs for the District of Columbia and for U.S. students studying abroad are set at the highest state cutoff (typically New Jersey). The cutoff for students in U.S. territories and possessions falls at the Commended level each year. Boarding schools are grouped by region. The cutoff for a given region is the highest state cutoff within the region.
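The rules in the paragraph above can be expressed as a small function. This is a sketch: the function name and the boarding-school region membership are hypothetical, and the example uses only a handful of Class of 2026 cutoffs from the table, so “highest state cutoff” here means the highest within that subset.

```python
def apply_special_rules(state_cutoffs: dict[str, int], commended: int,
                        boarding_regions: dict[str, list[str]]) -> dict[str, int]:
    """Apply the cutoff rules described in the post to a set of state cutoffs."""
    # No Semifinalist cutoff can fall below the national Commended level.
    cutoffs = {state: max(c, commended) for state, c in state_cutoffs.items()}
    highest_state = max(cutoffs.values())

    # DC and U.S. students studying abroad use the highest state cutoff.
    cutoffs["District of Columbia"] = highest_state
    cutoffs["Outside US"] = highest_state
    # U.S. territories and possessions sit at the Commended level.
    cutoffs["Territories"] = commended
    # Boarding schools use the highest state cutoff within their region.
    for region, members in boarding_regions.items():
        cutoffs[f"Boarding: {region}"] = max(cutoffs[m] for m in members)
    return cutoffs


class_of_2026 = {  # subset of the "Class of 2026 (Actual)" column
    "Connecticut": 223, "Maine": 217, "Massachusetts": 225,
    "New Hampshire": 219, "New Jersey": 225, "Vermont": 216,
}
regions = {"Northern New England (hypothetical)": ["Maine", "New Hampshire", "Vermont"]}
result = apply_special_rules(class_of_2026, commended=210, boarding_regions=regions)
print(result["District of Columbia"])  # 225
print(result["Territories"])  # 210
print(result["Boarding: Northern New England (hypothetical)"])  # 219
```

The DC and Territories values match the Class of 2026 row of the table (225 and 210).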

Change is always the theme

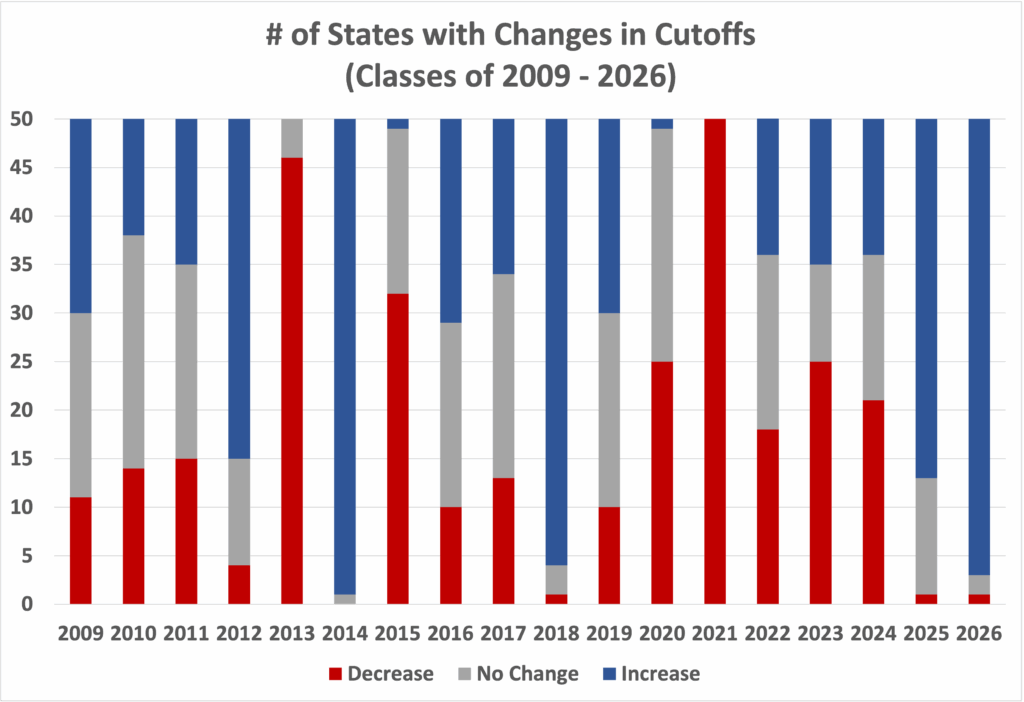

Over the last two decades, at least half of the state cutoffs have changed each year. In some years, as many as 49 states saw ups or downs, usually because of questionable test forms. In addition to last year’s exam, the PSATs from 2011 (Class of 2013), 2016 (Class of 2018), and 2019 (Class of 2021) stand out as problematic. The anomalous 2019 results could be traced back to a particularly mis-scaled form, which I wrote about at the time. The Class of 2014 also saw significant changes, but those were more of a bounce-back from the previous year. The question for the Class of 2027 is how much of a bounce-back will be seen this year.

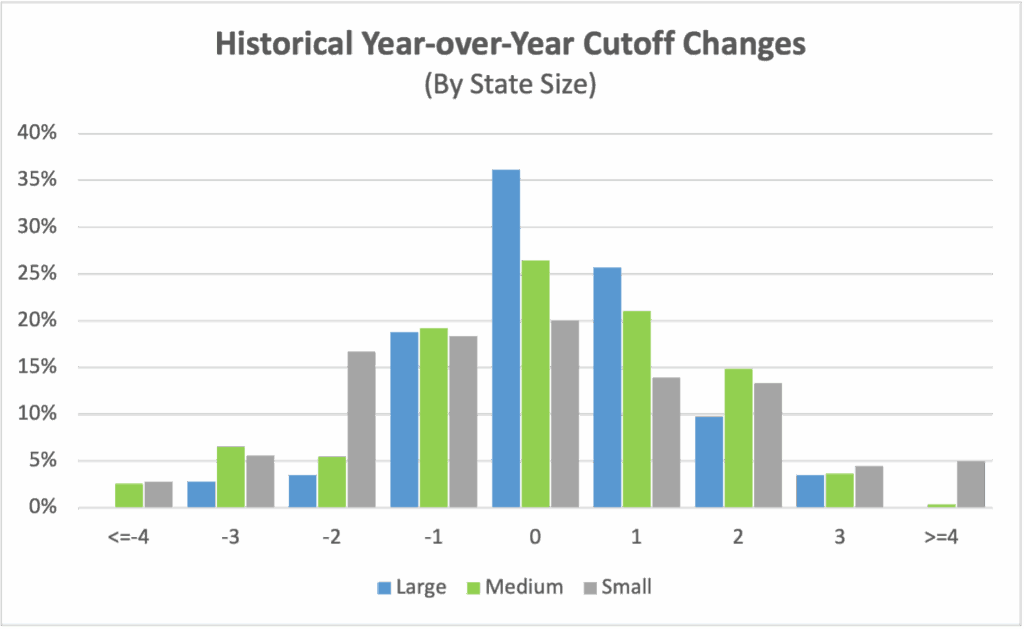

Cutoffs are particularly bumpy in states with smaller pools of test takers and National Merit Semifinalists. Over the last dozen years, cutoffs in the 12 largest states have remained unchanged 36% of the time, while cutoffs in the smallest states have remained unchanged only one time in five. No large state’s cutoff has jumped by more than 3 points in a year, whereas 6-point changes have occurred among the smaller states.

When are National Merit Semifinalists announced?

The lists of Semifinalists will not be distributed to high schools until the end of August 2026. With the exception of homeschoolers, students do not receive direct notification. NMSC asks that schools not share the results publicly until the end of the press embargo in mid-September, but schools are allowed to notify students privately before that date. NMSC does not send Commended Student letters to high schools until mid-September. Compass will keep students updated on developments as the dates approach.

Do state and national percentiles indicate whether a student will be a National Merit Semifinalist?

No! Approximately 1% of test takers qualify as Semifinalists each year, so it is tempting to view a 99th percentile score as indicating a high enough score — especially now that College Board provides students with percentiles by state. There are any number of flaws that rule out using percentiles as a quick way of determining National Merit status.

- Percentiles are based on section scores or total score, not Selection Index

- Percentiles are rounded. From a National Merit perspective, there is a large difference between the top 0.51% and the top 1.49%, yet both round to the 99th percentile.

- Percentiles reveal the percentage of students at or below a certain score, but the “at” part is important when NMSC is determining cutoffs.

- The number of Semifinalists is based on the number of high school graduates in a state, not the number of PSAT takers. Percentiles are based on PSAT takers. States have widely varying participation rates.

- Most definitive of all: Percentiles do not reflect the current year’s scores! They are based on the prior 3 years’ performance. They are set even before the test is given. And if you are going to use prior history, why not use the record of prior National Merit cutoffs rather than the highly suspect percentiles?
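The rounding problem in the second bullet is easy to demonstrate. This sketch uses a simplified model in which a student in the top X% sits at the (100 − X)th percentile; College Board’s exact percentile definition may differ in detail, but the rounding effect is the point here.

```python
def displayed_percentile(top_share_pct: float) -> int:
    """Whole-number percentile for a student in the top `top_share_pct` percent.

    Simplified model (an assumption): percentile = 100 - share of students
    in the top group, rounded to the nearest integer.
    """
    return round(100 - top_share_pct)


print(displayed_percentile(0.51))  # 99
print(displayed_percentile(1.49))  # 99
print(round(1.49 / 0.51, 1))  # 2.9
```

Both students see “99th percentile,” even though the second group is nearly three times the size of the first, which is far too coarse for a competition where roughly 1% of test takers qualify.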

Entry requirements for National Merit versus qualifying for National Merit

Your PSAT/NMSQT score report tells you whether you meet the eligibility requirements for the National Merit Scholarship Program (NMSP). In general, juniors taking the October PSAT are eligible. If you have an asterisk next to your Selection Index, it means that your answers to the entrance questions have made you ineligible. Your answers are conveniently noted on your score report. If you think there is an error, you will also find instructions on how to contact NMSC. Meeting the eligibility requirements simply means that your score will be considered. Approximately 1.4 million students enter the competition each year. Only about 57,000 students will be named as Commended Students, Semifinalists, Finalists, or Scholars. See National Merit Explained for more information.

Received Semifinalist notification in Arizona with a 221

Congratulations to you and your student, and thanks for sharing!

Hi! Do you know if that was the cutoff?

Art,

Is it possible that there is some differentiation between PSAT SI and Alt Entry SI this year? Or difference in SI based on Verbal/Math SI combination this year?

I know it sounds crazy, but I am seeing conflicting information for 220 CA, 219 TX and 215 OH on other sites.

Has misinformation been normal early on (in prior years)?

Mina,

The SI calculation is sacrosanct, so I’m sure that’s not the issue. It’s not impossible that NMSC decided to distinguish between PSAT SI and AE SI, but it’s a stretch. SAT scores don’t come with a nice Selection Index tab, so it’s also possible that some AE reporters have miscalculated their SIs. Things are always coming fast and furious around this time, so conflicting information is not that unusual. I’m a broken record on the topic, but I wish National Merit would join the 21st century and notify students electronically.

Do you know of people who got in with a 220 in CA?

Aaron,

I do not. So far I’ve had the report of a student missing out at 220.

Was this AE or PSAT? I think from the conflicting information it might be reasonable that they distinguished.

Hi, Art. Chances for a 221 in TX? Heard nothing yet. I hear that a 219 made it on some sites, but I hear that a 219 did not make it on other sites. On pins and needles!

I wish we had something definitive. Based on some of the reports out of high-cutoff states, I think you’re solid with a 221 in Texas.

How about 220 in TX?

I’ve seen mixed reports elsewhere about Texas. A 220 is still on the bubble.

I got the letter. PSAT@220.

That’s great news, Bryan. Thanks for the confirmation.

One of my friends made it (confirmed) with a 221 in Texas.

Thanks, AM!

To give you a start on Washington, a 222 was confirmed as SF today for my friend’s kiddo.

Thank you for passing that along, Shannon.

Anything about Michigan yet?

Nothing directly, Jakub.

Have you heard anything from MN? My son is a 221. His school hasn’t heard which I think is a bad sign.

Not directly. A state hitting a new record in a rough year would be a very unusual circumstance. A school not yet receiving NM information or having it in the wrong person’s mailbox, on the other hand, is almost standard operating procedure at this time of year. Don’t let the anxiety win!

Do we have a rough estimate of how many Semifinalists there are this year, with all the Alternate Entrants?

Russ,

Since NMSC used Alternate Entry SAT scores in calculating cutoffs (see the comment above yours), the total number of SFs will probably not change much. I’d estimate 16,500 – 17,000. Their letters refer to 16,000, but they are infamous for using round and outdated numbers in those circumstances. In any event, no substantial change.

Thanks Art!

Two things:

1. I’m not sure which comment you’re referring to. Could you point it out?

2. I thought that Alternate Entry scores were typically not used to determine cutoffs? Has this changed? I expected far more than 16-17,000 Semifinalists this year, so am surprised to hear this.

Russ,

The threading on this blog is horrible, sorry. It’s from David at 6:07am today. You are correct that AE scores were not *typically* used to determine cutoffs. Apparently NMSC decided that keeping that policy in such an atypical year would lead to crazy anomalies (I’ve previously written about the ridiculous cutoffs it would have created in California, for instance). So AE scores were considered in calculating cutoffs.

Has there been any information on the Maine cutoff?

Nothing. Sorry, Kai.

Art,

My son qualified from Kansas with a 217. I don’t know the scores of other kids who qualified from his school.

Congratulations to your son, Anu. I just got a separate report of a 215 qualifying in Kansas, too.

Hi Art. 215 qualified in Kansas.

Thanks, Kevin! I imagine congratulations are in order.

I can confirm that 215 qualifies in KS, but I don’t believe a 213 did, so it should be 214-215.

Thank you, Lincoln.

Any updates this morning? Specifically looking for information on Indiana. Thanks so much!

Mindi,

I’ve seen reports of 211 elsewhere on the web, but I don’t have any direct reports.

Thank you. Sitting at 213 over here. I guess we will keep hope for a little longer 🙂 Much appreciated.

How likely is it that Missouri will fall to a 214 cutoff? It is currently estimated at 215 and I scored a 214.

Kyle,

215 is already low for Missouri, but I’d hold out hope.

My California student with a 221 was notified yesterday that she made SF. Curiously, the same day we received a recruitment mailer from U. of Oklahoma targeted to parents of Semifinalists. How is it that they knew before we did?

Parent,

Congratulations! And thanks for confirming that 221 qualified in CA. College Board typically sells lists based on score breaks and not on NM status. It’s possible that NMSC sells the list to Oklahoma, but it’s also possible that Oklahoma is simply buying a cross-section of names at >1450 (or similar).

How likely is NJ at 222? I saw one Reddit post from someone claiming he got notified with a 221 index, but nothing more.

I saw that, too, njparent. I don’t know any more information than you do. If correct, it’s pretty important, since NJ has traditionally set the high-water mark for the country. I’d be cautiously optimistic at 222.

Many, many schools here canceled the PSAT, so I do think a drop is possible. It depends on how Alternate Entry ended up being treated.

Son got his letter today. 222 qualified for NMSF in NJ.

Congratulations, NJP! If your son has any friends with 220s or 221s, please encourage them to stop by with news.

Hi Art,

This is just a clarification, but Puerto Rico is considered a territory right? I have a 216 SI so I can’t afford to have it considered outside of US. Thanks!

Ross,

That’s right. And you’ll qualify as a Semifinalist. Congratulations!

Hi Art,

I’m a little confused why people are comparing this year’s cutoffs with Class of 2021 cutoffs since you’ve methodically shown that the Class of 2021 cutoffs were outliers or anomalies. Shouldn’t we be comparing this year’s cutoffs with Class of 2020 or Class of 2019 cutoffs (or some kind of average)? My prediction is that most state cutoffs will be one point less (or more) than the Class of 2020 cutoffs. Given that logic, what are the odds that Texas will come in at 220? Thanks.

Nascil,

You make a good point. It’s natural, though, for people to have a “recency bias” and think about the most recent set of cutoffs. The fact is that any comparison is a shot in the dark this year. The word unprecedented gets overused, but I think a year when there is a January PSAT and NMSC has to change their rules about Alternate Entry can safely be called unprecedented. That’s why I’m hesitant to lay odds for or against a score close to the bubble. Hopefully we find out soon enough.

Hello Art!

What is the Ohio cutoff and how accurate do you think it is. Should I hold up hope if I have a 215 SI?

Thanks!

Riya,

Based on reports, the Ohio cutoff is 215 or lower. Those reports usually prove accurate, so I’d be cautiously optimistic!

Art,

Thank you so much for your updates. My son qualified with a 223 in TX. We just received an email from the school.

Congratulations to your son, TM!

What are the chances a 219 qualifies in Georgia?

Abhinav,

There is not a lot to go on at this point. I think GA is likely to end up at 219 or 220. Good luck!

Do you see Kansas dropping to 213? It would be a one point drop from last year so would it be potentially feasible?

Nate,

Yes, I think it’s possible.

Should I still maintain some hope for a 220 in CA? Some have stated they got it; others said they haven’t received anything.

Steve,

Do you mean “got it” in that they received an NMSF letter with a 220 score or do you just mean that some California students have already received notifications? I haven’t personally heard of the former, but that’s not to say it didn’t happen. I’d hold out hope until we get more clarity!

I’ve heard that 220 qualified, based on a homeschool letter, from the same person who leaked Florida’s 217, but I haven’t heard back from the parent who said 220 didn’t make it. The person who reported 220 here said the same on College Confidential and Reddit. I’ve contacted them for answers but haven’t received anything.

Thanks, Steve. I’m going to update the post with some of this information.

Hello Art,

I also have a quick question on California. I see ‘California 221 or 222’ instead of ‘<=222’. Is there any specific reason? Thanks!

Anon,

I got a report from a student (parent) with a 220 who did not qualify. I’m adjusting the post so as not to be misleading. Hopefully we hear from more Californians.

John,

My info comes from a school that may have had no one with a 218, so it’s possible the reports of a 218 qualifying are correct.

Texas is confirmed 220.

Thanks, Anon. That will be a relief to many.

Mississippi – Semifinalist status is above 212 (so 213 or above). One person with a 212 reported being confirmed as not qualifying.

Reported on r/psat

Thanks!

So is 213 still in play?

Hi Art, it seems weird that CA, with the largest number of SFs, hasn’t leaked more. Is this your experience? Do you have a lot of confirmations of that 222, and we are just hoping for a 221 to pop up (we are, LOL!)? I can’t see the message about them including AE scores in the Semifinalist numbers, but I am wondering whether that, too, is confirmed. Thanks so much

It’s frustrating — especially since I live in California — but not that unusual. No, there is nothing to make me think it’s 222. In fact, there are conflicting reports even about 220. We may just have to wait a bit longer.